Transaction log survival guide: Shrink 100GB log

Introduction

You got yourself in situation where transaction log has massively grown, disk space became dangerously low and you want a quick way to shrink it before server stops ?

There are many articles on the net on that theme, but unfortunately many of them are wrong, giving false and even dangerous information. This will instruct you how to do a log shrinking properly, and give you the two methods: quick one that breaks log backup chain, and the one that does not break the log backup chain which is not as quick. But remember, this only buys you the time to learn about transaction log and how to properly set it up. That is your homework after that log crisis is gone!

Investigate the cause – quick!

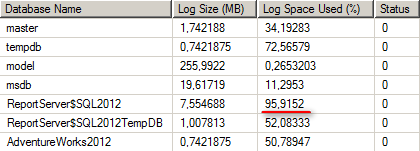

To see the log size and percentage of uncleared log space (active/used log part cannot be overwritten. When log cannot wrap-around and reuse the space marked as “free”, log grows):

|

1 |

DBCC SQLPERF (LOGSPACE) |

Normally, that percentage should be low or close to zero for bigger log files.

There is also a fancier query to get free space in transaction log files:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

DROP TABLE IF EXISTS #used CREATE TABLE #used(database_id INT, file_id int, size bigint, type_desc nvarchar(60), used_pages bigint) -- file_id INT, physical_name nvarchar(400) INSERT INTO #used EXEC sp_MSforeachdb 'USE [?]; SELECT database_id = DB_ID(), f.file_id, f.size, f.type_desc, UsedPages = CAST(FILEPROPERTY(f.name, ''SpaceUsed'') AS BIGINT) FROM sys.database_files f' SELECT [DB name] = ISNULL(d.name, '(ALL)'), [Data File GB] = SUM(CASE WHEN f.type_desc<>'LOG' THEN f.size ELSE 0 END)/(128*1024), [Log File GB] = SUM(CASE WHEN f.type_desc='LOG' THEN f.size ELSE 0 END)/(128*1024), [Log Used GB] = SUM(CASE WHEN f.type_desc='LOG' THEN f.used_pages ELSE 0 END)/(128*1024), [Log Free GB] = SUM(CASE WHEN f.type_desc='LOG' THEN f.size-f.used_pages ELSE 0 END)/(128*1024), [Log Used Pct] = SUM(IIF(f.type_desc='LOG', f.used_pages, 0))*100 / NULLIF(SUM(IIF(f.type_desc='LOG', f.size, 0)), 0), [log reuse wait] = d.log_reuse_wait_desc FROM sys.databases d LEFT JOIN #used f ON d.database_id = f.database_id WHERE d.source_database_id IS NULL GROUP BY GROUPING SETS( (d.name, d.log_reuse_wait_desc), () ) ORDER BY [Log File GB] DESC |

Run this query to see the reason why the log grows (why it is not reused):

|

1 2 |

select name, log_reuse_wait_desc, recovery_model_desc from sys.databases |

The most often cause you will see is “LOG_BACKUP”, so the next steps solve this reason of log growth. If you see other reason, like “ACTIVE_TRANSACTION”, you have to deal with that reason prior to log shrinking (e.g. commit that long-running transaction. Use “DBCC OPENTRAN” to find it). “NOTHING” means log will not grow anymore because it can be reused.

Shrink the log – fastest method

If your log is really big, like 100GB, or you do not have enough time or space to backup your 100GB transaction log, you can use this very fast method. But, be aware that it breaks the log backup chain. That means you will lose point-in-time recovery ability in time period between last successful log backup and end of the next differential backup we will take here. Point-in-time recovery to time after backup will be possible again. This method should take no more than one minute, no matter how big your log is.

First, execute sp_helpfile to find the log name, and use it here:

|

1 2 3 4 5 6 7 8 9 10 |

-- This breaks transaction log backup chain. -- No point-in-time recovery after this! alter database MyDatabase set recovery SIMPLE -- Only in SIMPLE recovery CHECKPOINT initiates log clearing process CHECKPOINT -- marks VLFs free for reuse -- Once cleared, you can shrink the log file. -- 1000 MB, Choose anything you like but not too small ! DBCC SHRINKFILE(2, 1000) |

Done! Your log should now be shrinked to about 1GB.

Now put your database back to full recovery model:

|

1 |

alter database MyDatabase set recovery FULL |

Although sys.databases will now show that database is in full recovery model, it is actually still in SIMPLE recovery until full or differential backup is taken! We will enter full recovery model and initialize log backup chain by taking a differential backup because it is much much faster than full backup:

|

1 2 3 |

--full recovery model will take effect only after a backup backup database MyDatabase to disk='D:\mydb.dif' with differential, compression, stats |

Shrink the log – without breaking the log backup chain

If you do not want to lose point-in-time recovery ability (you don’t want to break the log backup chain), and you have the time and space to backup your 100GB log file, or log is not really so big – this is the method for you!

Execute the job that takes transaction log backup and wait for it to finish OR do it yourself with this command:

|

1 2 3 |

-- change the file name on each execution! BACKUP LOG MyDatabase TO DISK='D:\transactionLog1.trn' WITH COMPRESSION, STATS |

That first log backup will take a VERY long time because almost all 100GB must be copied. Subsequent log backups will be very fast and very small even if the log file itself is still 100GB!

Use “sp_helpfile” to find the log name and use it in this shrink command. Do not shrink to less than it’s normal operational size (do not truncate to zero!):

|

1 |

DBCC SHRINKFILE(2, 1000) -- Shrink to e.g. 1000 MB |

Repeat this 2 commands (log backup to filename2, shrink, log backup to filename3, shrink, …) until the log is shrunk. Usually 1 or 2 cycles are enough.

Be careful to change the filename in each log backup iteration!

Phew, log is small again. What now?

After your log is back to manageable size, do this:

- Ensure your log backup job is set and is functioning properly.

- Optimize the initial log size, growth, and number of VLF’s.

- Read and learn about transaction log

fantastic post! This is so chock full of users information and the resources you provided was helpful to me. There I found a very informative blog also describing reason why log grow and how to prevent this. You may have a look at this URL:

http://www.sqlmvp.org/transaction-log-is-too-big-or-growing-unexpectedly/