8 Myths about Storage Spaces

Introduction

EVERYBODY should read this!

Whether you have a big database that needs speed, or other valuable data like documents or just personal pictures you do not want to lose when a disk fails (disks DO fail without notice, it is just a matter of time), you should read this post.

There is a hidden gem in Windows OS you can leverage for free. Not many people know about it, or how much value it can bring you. Read on and watch the demo in the video.

Benefits

What benefits can Storage Spaces give me?

Well, if you do not have Windows OS, or you have only 1 disk, then none. For those having or planning to have at least 2 disks (plus OS drive) it can:

- Increase the speed of your local storage, as multiple disks work in parallel.

- Get resiliency to failure of a (one) disk. Or two failed disks if you choose three-way mirroring. That can save you from losing your data if you do not have a backup (you should have a backup). Or from ugly downtime caused by a disk failure.

- Consolidate many DIFFERENT disks into only a few big, fast, resilient virtual drives, taking fewer drive letters.

- Ability to expand the storage capacity and speed by adding new physical disks, without changing ANY application or database settings – transparent to any software you use, no config changes needed in them, they will just see that an “old” drive got bigger and faster.

- On the SAME physical disks you can have a fast “simple” (striping) virtual disk for temporary stuff, eg. tempdb database, scratch disks, temp folder, and at the same time, over the same disks, you can create a resilient “mirror” or “parity” virtual disk which protects your data from at least one disk failure. You can eg. place more important data on that virtual disk, which is a tiny bit slower, but resilient to disk failure.

- Cloud Servers – have disks which are mirrored anyway. So just create a “simple” (striped, RAID0) virtual disk across all of the drives and enjoy a phenomenal increase in speed without losing capacity.

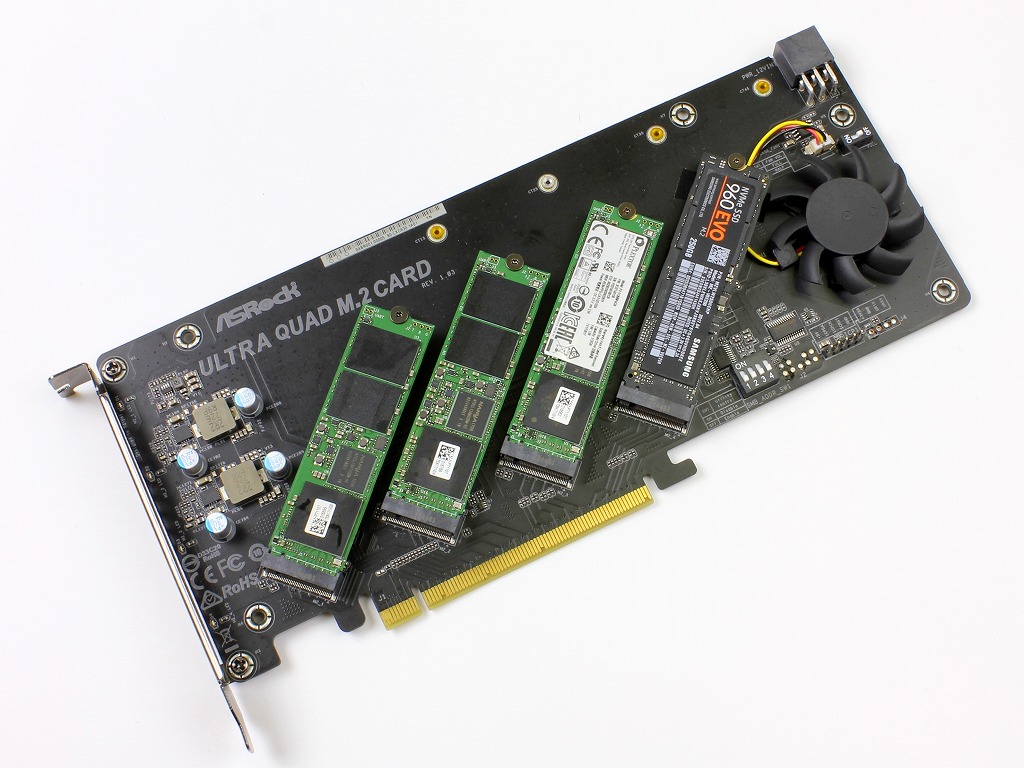

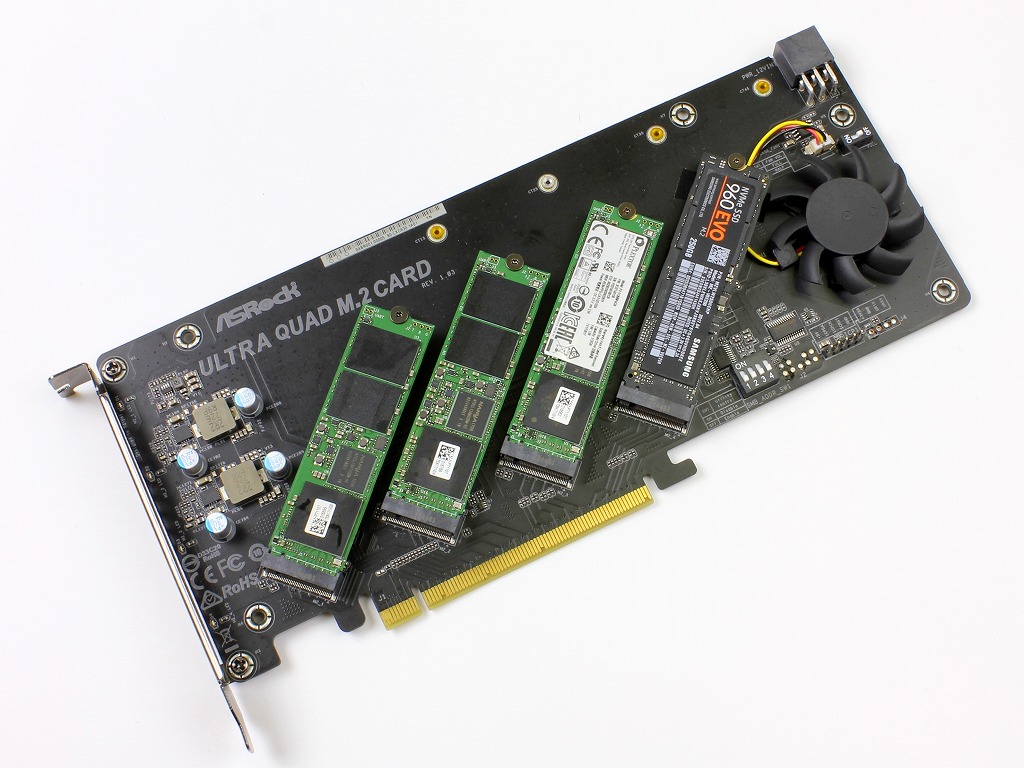

If you need speed, today’s normal, affordable M2 drives reach 3200 MB/s and 500 000 IOPS easily, just one individual drive, eg. WD BLACK SN750. One can use a PCIe card like this one, that measures 10 000 MB/s (yes, 10 GB per second – watch test here!) write and read speeds! Insane. And you can have 4 such cards if motherboard permits, for total of 16 M2 disks:

Filled with 2TB M2 drives, that would give you 16×2 = 32 TB of space with totally insane speed of 40 GB/s!!! In theory at least.

UPDATE 2019-12-05: In practice, this card won’t work if your MB is not on its “supported” list, or your MB does not have the “x16 = 4 devices x4” option, or your CPU/MB does not have enough free PCIe lanes. That means it won’t work in most cases unless you combined all components very carefully.

Terminology

Storage Pool = set of disks (physical). Disks can be very different in size and type. We will create virtual disks on this set of physical disks.

Storage Space = Virtual Disk. Created on a set of physical disks (Storage Pool). You define the size and resiliency type (simple, mirror or parity) of the Virtual Disk.

Volume = partition on virtual disk. Can have a drive letter assigned.

Storage Spaces = Microsoft’s technology to turn local disks into arrays of disks, building virtual disks on them. Kind of software RAID. And works very, very well.

Storage Spaces Direct = completely DIFFERENT from Storage Spaces. Different technology and purpose, but similar name to confuse people. Like Java and JavaScript. While Storage Spaces is about arrays of local disks, Storage Spaces Direct is like a Virtual SAN: multiple machines in a cluster present their local disks as one big, resilient SAN storage. Requires very fast network between cluster nodes to be efficient. Multiple machines acting as one storage. While Storage Spaces is much simpler technology turning local disks of one machine into arrays of disks.

8 Myths about Storage Spaces

Whether in form of a myth (We cannot …!) or a question (Can we…?), here are 8 interesting things about Storage Spaces:

- Storage Spaces works only on server OS, it cannot work on Windows 10.

NOT true! It works on Win10 quite nicely.

- With only 2 disks, can you have both mirrored and striped virtual disks at the same time?

Mirroring = resiliency, Striping = speed and capacity. Yes we can.

- Adding disks – if we create pool with 2 disks initially, we cannot add just 1 disk, we must add “column” number of disks we initially had (in our case 2).

NOT true! We can add 1 disk or as many as we like.

- Adding disk – does NOT increase speed, only space.

It adds speed too! But in server OS you will need to run the PowerShell command “Optimize-StoragePool -FriendlyName <YourPoolName>” to achieve that.

UPDATE: Sadly, this myth seems true (thanks Dinko!). “Optimize-StoragePool” only spreads data evenly over disks, so new disk gets data too. I got faster speed because I added a faster drive to the Pool. If you add a same-speed drive, speed will not be faster. It is determined by the number of “columns” – a number of disks used in parallel (does not include disks for redundant copies of mirror, but for simple and parity includes all disks, even parity disk). When creating a Virtual Disk, you can specify number of columns only through PowerShell, and it is NOT changeable after you create VD! Adding a drive to the pool does not increase columns of VD. To increase columns, you would need to create a new Virtual Disk with more columns, and copy data to it. Which is a real BUMMER! Microsoft, are you listening? We need ability to easily increase columns – to get speed with adding new drives, not just boring capacity!

- Expanding virtual disk – is it difficult, requires reboot?

Super-easy, few clicks, online.

- Shrinking virtual disk – is it possible?

Unfortunately not possible. Expand when you need, but do not over-expand too much, and do not expand too often. Each expand seems to take some space (around 250MB).

- Removing disk – Can you remove the disk that is used in virtual disks, and has data?

Sure we can, but we need to “prepare it for removal” first, to drain data to other disks.

- Failing disks – can mirroring/parity in SS really protect your data when a disk crashes? It calls for a test of disk failure!

Yes, it protects your data from 1 drive failure.

The Demo

You can see all of that “in action” in this video:

(Music from https://filmmusic.io

“Verano Sensual” by Kevin MacLeod https://incompetech.com

License: CC BY http://creativecommons.org/licenses/by/4.0/)

UPDATE 2019-08-01 About Myth 4 – Adding disk on Server OS – IMPORTANT!

After adding a new physical disk, we should “Optimize” (some call it “rebalance”) to spread data evenly across all drives. If you don’t, you might get a “disk full” error message if an individual disk gets full, even if other disks in the pool are empty and the pool has a ton of space, because a Virtual Disk allocates <column count> disks at once, and that many drives need to have enough free space for the growth step to succeed. In the video at 9:09 you can see Windows 10 has that “Optimize” checkbox. But server Windows has a quite different GUI and that checkbox is missing. So how to invoke “Optimize” after adding new disks to a pool in server OS?

Powershell command “Optimize-StoragePool” to the rescue! As described here and here, you might also have to run “Update-StoragePool” if pool was created before Windows 2016.

    Get-StorageJob    # Which jobs are currently running?
    Get-StoragePool   # List storage pools and physical disks (disk outside pool = "Primordial")
    #Update-StoragePool -FriendlyName "YourPoolName"    # If created before Win2016/Win10
    Optimize-StoragePool -FriendlyName "YourPoolName"   # Rebalance, spread data across all disks. Win2016+

“Storage Spaces Direct automatically optimizes drive usage after you add drives or servers to the pool (this is a manual process for Storage Spaces systems…). Optimization starts 15 minutes after you add a new drive to the pool. Pool optimization runs as a low-priority background operation, so it can take hours or days to complete, especially if you’re using large hard drives.”

Summary

If you have, or plan to have, more than 2 drives, leverage Storage Spaces to get simpler disk management, faster disk speeds, and resilience to disk failure. It is free and very, very efficient. I personally used Storage Spaces to save some very big database servers in the cloud (Windows 2016 server OS) that suffered from serious disk issues. After applying Storage Spaces, the disk problems went away and they got the speed they needed.

Useful Links

- Capacity Calculator for Storage Spaces – what disk size to buy when expanding capacity to keep entire space usable?

- “Storage Spaces FAQ” from microsoft.com – very good, answers many questions.

- “Understanding Storage Space Internal Storage” from itprotoday.com – tries to explain columns and interleave

- “Dell Storage with Microsoft Storage Spaces Best Practices Guide” from dell.com – very detailed, also click on their other pages that explain Interleave, Virtual Disks – excellent stuff, a must read!

- “Storage Spaces Demo” from IT Free Training – near the end explains tiering.

Hi Vedran!

Nice article! Difficult to find such summarized article about Storage Spaces on the Internet. Please explain a little bit more on Myth #4. You said that by adding physical disks you also increase speed. In my experience you have to recreate virtual disk to utilize additional number of columns because – more columns, more speed. It is not possible to increase number of columns without destroying virtual disk so just by adding physical disk it will not automatically increase speed. Please clarify on what did you have in mind…

Thanks Dinko for the comment!

At 9:09 in video while adding new physical disk you can see a checkbox “Optimize drive usage to spread existing data across all drives”. In server OS (eg Windows 2016) a GUI for Storage Spaces is quite a bit different, and as I remember it does not include that checkbox. Maybe there is a way to do that in server OS (hidden in GUI, powershell)? Without that “spread the data” step, it is logical not to see increase in speed when adding new drives.

There is an Optimize-StoragePool PowerShell cmdlet in Windows Server 2016 that, according to Microsoft documentation, does the following:

“The Optimize-StoragePool cmdlet rebalances the Spaces allocations in a pool to disks with available capacity.

If you are adding new disks or fault domains, this operation helps move existing Spaces allocations to them, and optimization improves their performance. However, rebalancing is an I/O intensive operation.”

I just did a performance test on my Windows Server 2016 (14393.3085) with 1 virtual disk (mirror, 1 column, 2 physical disks in the pool) before and after adding 2 additional physical disks. Storage pool was optimized after adding physical disks which rebalanced the data across physical disks. However, I have measured the same performance before and after. Also, it did not change the columns number for the virtual disk. But I still think that statement about performance increase is correct because if you have multiple virtual disks in the same pool, unbalanced data spread could stress some physical disks more than others. If you have only one virtual disk in the pool, there is no difference.

Thanks Dinko for your valuable insight and measuring. It is a sad thing to say we do not get speed by adding disks (except maybe a little due to rearranging of data). Because adding a new Virtual Disk with more columns, copy all data, remove old VD – is a real bummer and a downtime.

I hope Microsoft will do something about it, to give us ability to increase column number without wasting double disks and a huge downtime. I corrected the post according to your findings – thanks again!

Great summary!

I am a photographer using storage spaces on an external drive that is networked through our main computer. Love how easy it is.

I just discovered that I could not expand a storage space past a ceiling created by cluster size (the file system has a maximum size) … I created my storage space of 10TB because at the time I didn’t need more space and I didn’t have more discs in the enclosure – and the storage space dialog just says add more drives when you need to. The cluster size ended up at 4 kb which gave me a maximum of 15.99 TB in the volume, if I try to make it larger than that it doesn’t let me.

I found the following info from a user on Reddit …

Cluster size – max volume

4kb – 16TB

8kb – 32TB

16kb – 64TB

32kb – 128TB

64kb – 256TB

This means that if you know you are going to eventually want your virtual drive to be 30TB, you will want to initially create your storage space at more than 16TB even if you only have 2TB of physical drives to start. The initial volume size sets the cluster size.
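The table above follows from a simple rule of thumb: an NTFS volume can address roughly 2^32 clusters, so the maximum volume size is about the cluster size multiplied by 2^32. Here is a minimal Python sketch of that arithmetic. The function names are made up for illustration, and the real limit sits slightly below these round numbers, which would explain the 15.99 TB ceiling seen with 4 KB clusters.

```python
# Rule-of-thumb NTFS limits: a volume can address about 2**32 clusters.
# Assumption: round numbers only; the real limit is slightly lower.
MAX_CLUSTERS = 2 ** 32
CLUSTER_SIZES_KB = [4, 8, 16, 32, 64]


def max_volume_tb(cluster_kb):
    """Approximate maximum volume size in TB (binary) for a cluster size in KB."""
    return cluster_kb * 1024 * MAX_CLUSTERS // 1024 ** 4


def smallest_cluster_kb(target_tb):
    """Smallest listed cluster size whose limit covers the target volume size."""
    for kb in CLUSTER_SIZES_KB:
        if max_volume_tb(kb) >= target_tb:
            return kb
    raise ValueError("target exceeds the largest cluster size in the table")


if __name__ == "__main__":
    for kb in CLUSTER_SIZES_KB:
        print(f"{kb} KB clusters: about {max_volume_tb(kb)} TB maximum volume")
    print("A 30 TB volume needs at least", smallest_cluster_kb(30), "KB clusters")
```

So if you expect the volume to reach 30 TB one day, the sketch suggests formatting with 8 KB clusters or larger from the start.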

One thing you didn’t mention that I saw in some Storage Spaces Direct documentation is the best practice of leaving unallocated space in the storage pool, that way if a drive dies Windows will start using the unallocated space to rebuild in the new drive. So for my set up I have 6 6TB drives in the pool, I am using 2 mirror parity so I am looking at a storage pool of 36TB with a 15TB volume (mirrored parity = 30TB of data) which leaves 6TB of unallocated which is equal to the size of a drive. Haven’t seen this best practice mentioned for Storage Spaces and don’t know if it will rebuild, but thought I would ask 🙂 … Windows 10 documentation for Storage Spaces is slim!

Thanks!

Hi Roger,

Virtual disk is “thin” provisioned, right? Because fixed-provisioned cannot grow. I think for a 2-way mirror you need to add at least 2 disks to be able to use their space. Run “Optimize drive usage” to help redistribute drive usage across existing disks and get some space from that. You mentioned it is a good practice to leave space of at least one disk – agreed.

You also need a backup if data is important. I usually use main storage which is an array of local disks in simple (striping) mode, which is fast but prone to data loss, plus a backup on external storage eg. Synology. Backup is configured in a way to be resilient to crypto viruses, like this: https://blog.datamaster.hr/crypto-virus-resistent-backup/

Hi Vedran,

Great article and even though few yrs old still holds the truth. We are starting to use storage spaces more for BDR server and adding redundancy without expensive hardware RAID controllers is a nice bonus.

One question regarding “good practice to leave space of at least one disk” – Should this be at least 1 LARGEST disk in the pool?

Eg.

Thin Provisioned, Mirrored total: 5TB

Disk1: 2TB

Disk2: 2TB

Disk3: 4TB

In this case, if Disk3 fails there isn’t enough room left on Disk1 and Disk2 to hold the 5TB (assuming almost all is used or 4.5TB at least). How would this play out with respect to data loss?

Thanks again!

Hi. On why it is a “good practice to leave space of at least one disk”?

Because, if one disk dies, a rebuild can immediately start by using that spare capacity.

It is like having a spare drive in a storage ready, for a case if one drive fails that rebuilding the volume starts immediately with that drive included.

That shortens the amount of time we spend in “no protection” state.

Which disk to put as a “spare”? The biggest is ideal, less ideal is the smallest, because the smallest can cover only for smallest drive failure automatic rebuild.

You could also be without any spare space and in that case rebuild of protection will start once you put a fresh disk there.
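To put numbers on the spare-capacity idea, here is a rough Python sketch. It rests on a simplifying assumption that is not from Microsoft documentation: a rebuild can start right away only if everything currently allocated in the pool (the footprint, including mirror copies) still fits on the surviving disks. The function name is hypothetical, and the rule that copies must land on different disks is ignored.

```python
def can_rebuild_after_failure(disk_sizes_tb, footprint_tb, failed_size_tb=None):
    """Rough check: does the allocated footprint still fit once one disk is gone?

    Assumption: free space anywhere on the surviving disks can be used for
    the rebuild. By default the largest disk is the one that fails.
    """
    if failed_size_tb is None:
        failed_size_tb = max(disk_sizes_tb)
    surviving_tb = sum(disk_sizes_tb) - failed_size_tb
    return footprint_tb <= surviving_tb


if __name__ == "__main__":
    # Example from the comment above: 6 x 6TB pool, 15TB mirrored = 30TB footprint
    print(can_rebuild_after_failure([6] * 6, 30))
    # Same pool with 33TB allocated: less than one disk of spare space is left
    print(can_rebuild_after_failure([6] * 6, 33))
```

With mixed disk sizes, the default of failing the largest disk reflects the advice above: spare space equal to the biggest drive covers a failure of any drive.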

Thank you for your follow up.

I hoped that it would follow the path of hardware based RAID but just wanted to confirm. It is little easier to relate to a designated physical spare disk in a RAID array rather than ‘some extra space’ acting as “spare drive”.

Cheers!

With this logic, might as well go for double parity from the very beginning.

(Used to only be supported on server OS’s, but might have changed since I played around with storage spaces many years ago…)

Even if a single parity config with enough spare space to automatically rebuild and regain redundancy in case of single disk failure, there will be a window of no redundancy during the rebuild.

With that said, great article! And valuable reader comments too!

I’m planning to migrate from my current mechanical setup to a SSD setup.

Landed on your article since I’m curious how much performance hit storage spaces with parity would have on a fast SSD setup.

I’m about to upgrade to 10Gbit at home, so 1250MB/s r/w with some speed to spare is a requirement.

I might check in again with results after some testing 🙂

HI,

was wondering if you found or know about a way to monitor the Storage Tier usage within a virtual disk.

i.e. if my VD is total of 300GB

performance tier is 100 GB and capacity tier is 200GB

then i would like to know how much is used from the 100GB.

I haven’t experimented with tiered storage yet, but I guess if you explore powershell objects around tiers, you might find properties that show you tier usage information. Maybe somebody else who reads this can answer more precisely.

Hi there,

I found out that an SS created in Win10v1809 has worse disk write performance compared to an SS created in Win10v1607.

for comparison:

SS created in Win10v1607 benchmark: 330MB/s

SS created in Win10v1809 benchmark: 240MB/s

*both benchmark on the same platform & hard disk

*The benchmark was run on Win10v1607 and Win10v1809

Jemiruddin

Hi,

Speed highly depends on number of “columns”, which means, number of disks accessed in parallel (simultaneously). Column count is set at creation of each Virtual Disk, and cannot be modified. It also cannot be more than number of physical disks. Your situation looks like you had 3 columns before and now you have 2 columns. Maybe it is a default that changed in higher version, if you have not specified it explicitly, and that caused the slower speed (I usually specify it using powershell to create VD). Check column count with this powershell command:

Get-VirtualDisk | select FriendlyName, ResiliencySettingName, Interleave, NumberOfColumns

Hi,

The configuration for both SS, created in 2016 & 2019, is exactly the same. No difference at all. Just one was created in Win10v1607 and the other in Win10v1809.

If you checked number of columns with the given powershell command, and it is the same as before, then I do not know the reason. If you created SS using the GUI (not powershell) then in new windows versions default number of columns for “simple” VD is changed and is 1 less than number of disks, 1 less than previously was default. That would give you a slower speed exactly as you described. And can be corrected by creating SS with explicitly specifying num of columns, not relying on default.

The SS created in powershell. Which I already save it as ’15colunm2parity.ps1′. Basically, the configuration no difference at all.

Thanks Jemi for the report. I don’t know what is causing this if the number of columns (you have not provided that though) is the same as before, and everything else except version is the same. Same script can (and will) result in different configuration options on different versions (eg. they will result in different default column count). If resulting configuration is exactly the same then really it would be a version difference and you should file a bug to Microsoft. It is a considerable slowdown, really big difference. In “simple” layout, with column count = disk count, you should get resulting speed about the same as sum of all single disk speeds. Eg, if each disk alone gives 100MB/s, with 3 disks you should get around 300 MB/s.
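The rule of thumb from this reply (speed of a “simple” layout is roughly the single-disk speed times the column count) can be written as a tiny Python sketch. This is an idealized estimate under the assumption that all disks are equally fast and nothing else is the bottleneck. The function names are made up for illustration.

```python
def estimated_simple_speed(per_disk_mb_s, columns):
    """Idealized sequential speed of a "simple" virtual disk in MB/s.

    Assumption: all columns work fully in parallel and the disks are equally fast.
    """
    return per_disk_mb_s * columns


def columns_needed(target_mb_s, per_disk_mb_s):
    """Smallest column count whose idealized speed reaches the target."""
    return -(-target_mb_s // per_disk_mb_s)  # integer ceiling division


if __name__ == "__main__":
    # Example from the reply above: 3 disks at 100 MB/s each
    print(estimated_simple_speed(100, 3), "MB/s")
    # Columns needed to saturate a 10Gbit link (1250 MB/s) with 100 MB/s disks
    print(columns_needed(1250, 100), "columns")
```

Since the column count is fixed when the Virtual Disk is created, an estimate like this is worth doing before creating it, not after.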

Hi Vedran.

I am planning to purchase an OWC Express 4M2 4-Slot M.2 NVMe SSD Enclosure to use as a fast external (thunderbolt 3) storage for video editing. It is basically the same as your AsRock quad card, but external (and not as fast, of course.)

My question is, will I be able to simply connect this device with a Storage Spaces RAID pool to another windows computer and work with it? In other words, is the pool tied to the machine that created it?

UPDATE 10.7.2020. It should work if individual disks are visible from the PC: “Storage Spaces records information about pools and storage spaces on the physical disks that compose the storage pool. Therefore, your pool and storage spaces are preserved when you move an entire storage pool and its physical disks from one computer to another.” from https://social.technet.microsoft.com/wiki/contents/articles/11382.storage-spaces-frequently-asked-questions-faq.aspx

Hi Žarko! Thanks for being active and asking. The question is – if your ultimate goal is to speedup video editing, is storage really your biggest bottleneck? If you use Premiere, maybe much faster process is using Adobe Media Encoder to convert all source videos to near-lossless QuickTime “ProRes 422 (HQ)”. It is fast for editing. Press “Render” whenever you go to pause. For export use “match source” and tick “Use previews” to export to “ProRes 422 (HQ)” with super-high speed. Then use Handbrake for quick conversion into H264/265 (MP4). See here: https://www.youtube.com/watch?v=AT8sU0MyncA

Answer to your original question: To be honest I haven’t tried. I returned my ASRock card because my motherboard had limited number of PCIe lanes (16 is “norm”) and almost all were already taken, therefore only 1 NVMe was recognized and others ignored because of lack of PCIe lanes (internal “bus” connections) on MB. If your computer sees individual NVMe drives in that enclosure, and you set Storage Spaces in certain order of disks, you would have to setup same Storage Spaces in other PC in the same order of disks. I think it is very risky, likely to corrupt data and probably unsupported.

But here is the good news: Good NVMe drives are fast enough to use them as individual drives inside this box, no storage spaces are required. Then you should be able to move this box around.

IMHO, laptops are generally slower than desktops, and are not the best tool for editing video, unless you really need portability. The fastest would be desktop filled with SSDs and NVMes into storage spaces, and very fast graphic card and CPU.

But aside hardware, this described video editing technique gives you really fast way to edit and export videos, even on laptops.

Unfortunately, the box in question does not have x4 bandwidth for each m.2 slot, therefore the need for RAID. Otherwise, the throughput is capped at around 700 MB/s for each populated slot.

My current workflow is exactly as you suggested, and it worked just great until recently when I got a new contract that will not let me have the luxury of time for proxy rendering. I mean terabytes of video footage weekly.

For this reason I am getting a powerful new workstation that will be able to crunch the source files (in multiple layers). This thunderbolt box would be a nice bonus, eg. for sharing the project with my colorist – that is why I asked if it could be transferred to another machine. I would not use it as a primary working unit since internal drives in the workstation will go up to 5GB/s.

Try Handbrake. In my case it encodes 60x faster than Adobe Media Encoder, and I’m ok with its limitations on resulting format (mp4) and resolution. Smaller, lower-res files are faster to manage. And they can be replaced in your Premiere projects by right-click original file->Replace Footage. Then you can select any file, but at least fps should match. Editing and even the colorist might work with these smaller files. On render you switch back to the original files. It is like a proxy method, but more flexible.

I know all about Handbrake, but I am trying to avoid any proxy rendering, hence the new workstation. I am not new here, been working professionally for the past 20 years. Mostly, smooth editing with source footage is not about hard drive throughput (only for red 8k and similar) but about CPU crunching power. And while I (the editor) can work with proxy files, the colorist cannot. He has to work with the original footage. BTW, when encoding into the same kind of file at same bitrate, Handbrake is about 15-20% faster than AME, and it also produces a gamma shift with certain type of footage which is unacceptable.

Thanks for the info! I’m not nearly as proficient in video, only as much as I need to record SQL Server videos. Can you please share your findings from disk test here? Then many people can benefit. Thank you!

Now I have read about the device you mention, and it seems it has its own software for combining disks, “OWC SoftRAID”. If you install that same software on two computers, I believe it should work, you should be able to switch computers. They mention Storage Spaces only in the situation where one enclosure is not enough. You would buy eg. 2 such enclosures, fit 8 M2 SSDs there, and combine these two using Storage Spaces. That probably would not be “movable” to another computer, but I think you need only one box with 4 SSDs so you should be good. But I haven’t used that device so it is safest to ask their support or someone who has it.

SoftRAID unfortunately currently works only in Mac OS, and this product is targeted for Macintosh users. They were mentioning windows beta tests, but I haven’t seen anything in the news lately.

That means on windows you see just 4 individual drives until this software comes out. One risky way, that can result in data loss, and maybe does not work is this: When you join a drive to Storage Pool, data is erased. Therefore, while drives are empty, join all drives to a storage pool on one computer, unplug and plug to other computer, join all drives to a storage pool there. Create a Virtual Disk, put some files there. Unplug, and plug into first computer. I don’t think it will work (Virtual Disk be visible), but you can try. This post gives hope that it might work, because config data is written onto pool itself: https://serverfault.com/questions/550136/can-storage-spaces-drives-be-moved-to-a-replacement-server-when-there-is-a-failu

That is an interesting idea. I will try it beforehand using my dual hard drive dock. Thanks.

Žarko, one more thing you can do IF the colorist is in the same local network (same building?): a NAS storage. Bump the RAM inside, and buy a 10Gbit card if higher speed is needed. But these video editors claim 1Gbps is fast enough to edit even 4K materials directly from the NAS, and it enables simultaneous work for multiple editors and colorists on the same files: https://www.youtube.com/watch?v=4jgEHyx3Kp0

And this: https://www.youtube.com/watch?v=U_XBGD12veI

DS1819+ gives around 400-500MB/s over 10Gbps.

Even if you are alone in the building, I highly recommend getting a NAS, even over 1Gbps network. It is great for backups and many more things.

Unfortunately my colorist is off-site, and while I have a sufficient internet bandwidth to send it to him online (500/250Mbps), he is still on ADSL. Funny you should mention Synology, as I do own one DS1815+ in RAID6 environment, which was a godsend. It is the single most useful device I have ever bought. I see that you are a tinkerer, maybe this will tickle your fancy: just google for xpenology project.

I need some advice regarding Windows Storage Spaces. If i have several small drives in the pool and then add a big drive, will i be able to use all the space on the big drive? I want to set up the pool with parity

For a given set of disks, a VD max capacity depends on the column count you choose and the type of resiliency (simple, parity, 2 way mirror, 3 way mirror). I’m not aware of any formula or official way to find the physical disk footprint of a thin provisioned VD when its max capacity is reached. If your column count is 3, which is the smallest (2+parity), and you have disks of 100GB, 200GB, 300GB and then add a 1000GB disk to add space, I think your max capacity is 200GB of capacity taking 300GB of space on the first 3 drives, then 200GB taking another 300GB of space on the 2nd-4th drives. Total max capacity is 400GB taking 600GB of space, leaving 100GB free on the 3rd drive and 900GB free on the 4th drive. Not nearly close to utilizing all available disk space. It is just my assumption on how it works, and needs to be tested. You can check the current footprint on physical disks via powershell, and there is also an “optimize” command which redistributes data more evenly across the disks, eg. after you add a fresh drive. But that won’t move your max capacity limit. The only way I can think of is to add more big drives (ideally to reach 3 big ones) so they can be fully utilized, OR create another VD with 2-way mirror with 1 column that would be able to use the remaining space. Even a 2-way mirror cannot use the entire space if you have 2 biggest disks of unequal size. Only a 1-column simple VD would always utilize the entire space. But it has no resiliency and is not very fast (because only 1 column).

Thanks for this excellent article.

I have some perhaps basic questions. Both are based on a two disc, two way mirror arrangement. I want to set this up so I can store all my photographs in duplicate so if one Drive fails, the photos are still safe.

1. If I copy a photo (or any file) to the storage space formed by the 2 drives, am I correct in saying that the software essentially duplicates it for me automatically – sending one copy to one drive and one copy to the other?

If so:

2a: Will programmes “see” both copies, or does windows automatically keep one hidden. For example, I copy a photo named X to my storage space. Storage space makes two copies; one on each drive. I then use photoshop / lightroom / windows gallery to open photo X. In each situation, will only one copy of X be visible to the software?

2b In the event one drive fails, will all programmes that reference a given file automatically start “seeing” the 2nd copy? For example, I use a programme called Adobe Lightroom classic. Within LRC you import photos by telling LRC where they are on a given drive. LRC doesn’t move the photo, it just knows where it is. In a single drive situation – if the drive breaks or is unavailable, LRC can no longer “see” the photo and tells you it is disconnected. With a two way mirror, would LRC automatically “reconnect” to the copy without intervention. For example, I import photo X as per 2a. Assuming the answer to 2a is “yes” and only one copy is visible, I reference file X in LRC so LRC knows where it is. If a drive then fails, will LRC automatically “see” the 2nd copy of X. I’m using this specific example to ask a wider question that I’m sure is applicable to many situations: If two copies of a file exist, and the answer to 2a is “yes” (only one is visible to software that accesses the drive) then does the storage space software automatically begin “projecting” the 2nd copy when the first copy fails automatically? I.e. do programmes using the file in question carry on as if nothing happened? I.e. is windows managing things so IT knows two copies exist, but make it so as far as users are and software is concerned, both copies share a single file path?

2c If one drive fails completely and needs to be thrown away, If all above stands, my files are safe on the 2nd Drive. But then, I would want to buy a replacement drive to repair the system such that once again my files are in two places. How simple is this? If I plug a new drive in, will windows automatically / can be told to use that to restore the original two way mirror? Or do you need to start again?

Thanks again,

Dave

Welcome David! When physical disks are joined to a Storage Pool they become “hidden” to all programs. Only Virtual Disks are exposed and visible to apps and they appear as “normal” disks would. The internal structure is hidden – apps do not know that there are two or three copies, or 8 disks working in parallel, apps see only a virtual disk as a normal disk. In a 2-way mirror or parity, if one disk fails, no data is lost and the system continues to run as if nothing happened. You will get a warning in Storage Spaces Manager, and you should replace the failed disk as soon as you can, because if another drive fails before you have replaced the disk (and the virtual disk rebuild has finished), then data would be lost. Replacing drives is very simple. The only tricky part is to physically remove only the failed drive, and not the healthy one. But even if you make a mistake and remove a healthy drive, I think no data would be lost, you would just have temporarily unavailable virtual disk(s) until you return that healthy disk.

Thank you very much, very clear and very helpful

Best wishes,

Dave

Hi Vedran, I am using a 4 disk 2 column mirror (4x10TB for 20TB capacity). I understand that to expand, I must add disks in multiples of 4. My question is: Can I add another 4 drives to my pool, for example 4x8TB drives, without having wasted space?

Hi Brice! Yes, you will be able to use the entire capacity if you add 4 disks of 8TB. Or of any size, as long as the 4 new disks are equal in size to each other; they can differ in size from the first 4. By adding 4x8TB you will get an additional 16TB of capacity. By the way, here is a post about how to calculate the max usable capacity of Storage Spaces, with downloadable code of a SQL Server stored procedure (T-SQL, could be rewritten in any language): https://blog.datamaster.hr/capacity-calculator-for-storage-spaces/

Video is already recorded too, and will be published in several weeks.

By the way, if such disks existed, you could also add only 2 drives of double the size of your first 4 drives, that is, 2x20TB. That would also use the entire space without any leftovers. Total capacity from 4x10TB+2x20TB for a 2-column mirror (stripe is 4 disks) would be 40TB.
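The arithmetic in the replies above can be checked with a tiny Python sketch. It assumes the best case the replies describe, where the disk sizes line up so that no space is left over, and simply divides raw capacity by the number of data copies. The function name `mirror_usable_tb` is made up for this illustration; it is not the capacity calculator from the linked post, which also handles uneven leftovers.

```python
def mirror_usable_tb(disk_sizes_tb, copies=2):
    """Best-case usable capacity of a mirror space, in TB.

    Assumption: disks can be fully balanced (no leftover space),
    so usable capacity is raw capacity divided by the copy count.
    """
    return sum(disk_sizes_tb) / copies


original = [10] * 4                   # 4x10TB, two-way mirror
with_8tb = original + [8] * 4         # add 4x8TB
with_20tb = original + [20] * 2       # add 2x20TB instead

print(mirror_usable_tb(original))     # 20.0
print(mirror_usable_tb(with_8tb))     # 36.0 (16TB more than before)
print(mirror_usable_tb(with_20tb))    # 40.0
```

For a three-way mirror, pass `copies=3`.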

Thank you for the clarification! Very well written article. I’ll look out for the video.

Optimizing is especially necessary if you use Tiered Storage Spaces, which uses the faster drives as a cache for the slower drives. The optimization process shifts data on and off the faster drives depending on how often it is accessed.

I really liked that technology until my storage went all solid. It can even shift data blockwise, so if you have a large database and only access a part of it that part might get switched to your M.2 instead of moving the whole database.

For a personal user it is a bit more complex to create Tiered Storage Spaces, as Windows 10 doesn’t officially support it and it is available only through the console. But with programs and games growing, this could shift your often-accessed assets like textures or Photoshop plugins to the faster storage while leaving the majority on slower ones.

Thank you for this article.

S2D seems like a really viable option for affordable storage. We tried to get it to work with a server cluster and attached JBODs in an SSD(cache)/HDD(capacity) configuration. Everything went together nicely (very easily in fact), but we had a bit of a challenge with the network I/O performance to and from the cluster, although transfer rates between the nodes were good. We began researching if RDMA was required but our manager/stakeholder pulled the plug. We responded with many of the points you made here but were told S2D isn’t something to use and that his consultants advised against it.

I disagree with their assessment and I’m in favor of an affordable hyper-converged solution over an expensive SAN. Is there anywhere you know of to research the success of S2D implementations and how well-received it is in the industry?

This blog post was about plain SS (Storage Spaces, one machine, similar to an array of disks), and I have seen it in many big production systems running for years with great success. S2D is something else: it is like a virtual SAN, a cluster of multiple machines presented as one logical storage to the outside world, a much more advanced thing than SS. I personally have never used S2D. I do know some folks who have used it, though, and they say it is good. My opinion is, if you have money for a SAN, get a SAN. It is definitely more robust than any virtual SAN or S2D. For super speed, a combination of local NVMe SSDs inside SS (plain Storage Spaces) works great and is super-fast, much faster than any SAN. I have even seen a combination of SAN and Storage Spaces that packs an array of local SSDs into a few logical drives.

Failing disks – can mirroring/parity in SS really protect your data when a disk crashes? It calls for a test of disk failure!

Yes, it protects your data from 1 drive failure.

not quite complete – the number of additional redundancy can be set with power shell command – so it’s not just a raid5-ish with 1 disk parity – but can also be set to raid6-ish 2 disk parity … or even to a raid7-ish 3 disk parity – but this requires power shell and isn’t available in the gui

it’s pretty much the same as with zfs raidz, raidz2 and raidz3

btw: yes, I know there’s no real raid-level 7 – but I also know it’s often used as a term for “raid5/6 with 3 parities”

You brought up a great point, which is the possibility of replacing a healthy drive. I assume your answer is “no” but I have to ask… Does Storage Spaces make finding failed drives harder in some way ? I assume the light on the hard drive tray will still blink, indicating which drive needs to be replaced.

Also, I have a question about IOPS in a mirrored configuration (in my case, 10 drives mirrored via SS, so I assume that Windows will give it 5 columns). Will the IOPS be similar to hardware RAID? Can or should I set it all up using ReFS instead of NTFS (I will already have the OS on separate U.2 drives which will be NTFS for booting into Windows)? I’ll be using a bare metal build BTW (no virtualization at all).

Storage Spaces supports drive-identification lights. It is good to test to make sure (also try Enable-PhysicalDiskIndication in powershell): https://social.technet.microsoft.com/wiki/contents/articles/17947.how-storage-spaces-responds-to-errors-on-physical-disks.aspx

IOPS should be similar to hardware RAID. We can assume which configuration works well, but measuring a few of the most promising configurations and settings will give us numbers (actual IOPS) and reveal what is really the best.

The final result depends not just on the disks; other components play their role too, like the motherboard (available total bandwidth for U.2 disks limits total possible MBps), drivers, even the CPU. With 10 U.2 disks a very good speed should be achieved in both IOPS and MBps, read and write. What is “the best” also depends on the workload: will it be mostly read, mostly write, will it be random small chunks or big file reads from start to finish, how many concurrent readers/writers are expected, are there any minimal expectations on latency, MBps, IOPS, etc. As for the file system: NTFS, definitely.

Storage Spaces corrupted all of the data on my parity array. I lost everything and it was a known issue that they hid. Storage spaces is hands down the most dangerous way to “protect” your data. This entire article should be removed so as not to persuade people to trust Microsoft with their data.

That sounds horrible, Evan, sorry to hear that. The issue you are referring to is, I assume, this one: https://support.microsoft.com/en-us/topic/workaround-and-recovery-steps-for-issue-with-some-parity-storage-spaces-after-updating-to-windows-10-version-2004-and-windows-server-version-2004-0337f14e-579e-4976-74d9-7acff8aab50e

and https://support.microsoft.com/en-us/topic/issue-with-some-parity-storage-spaces-after-updating-to-windows-10-version-2004-and-windows-server-version-2004-dff27583-e9ee-2cd5-1107-f455771c30fe

Unfortunately, RAID or any “redundant” storage can fail due to a software or hardware error. That “redundancy” is not a replacement for backup. Anything important should have at least one backup copy on a separate device (not on the same device). I personally lost files stored with a public cloud provider (not to name which one, a super-popular one, but it could be any; the files were gone, including their history). Since then, I create backup copies even of files that are on the cloud drive. I also hear of people losing data on big SANs after a firmware update. No place is completely safe. Unfortunately, we can only mitigate the risk with at least one backup location for important stuff.

Hi, thanks for the good article. I just want to check my scenario against the Storage Spaces feature. Let’s say I have 8 storage slots in one server: 2 slots are used for the OS as a mirror, and the remaining 6 hold 3 HDDs (6TB each) and 3 SSDs (1.9TB each). For safety, can I use all the storage slots with that composition, or should I use only 3 HDDs and 2 SSDs and leave 1 slot for a hot spare?

I’m worried about an incident with tiering on Windows 2012 R2 where all storage slots are full, one disk fails, and we can’t replace it online. In that case I would have to restore the data from backup and recreate the pool after replacing the failed disk.

Can you give me a suggestion or best practice for using the storage slots in my server, so that when a disk fails I can replace it online?

Is it possible to use all storage slots, or should I leave 1 slot for a hot spare?

Thank you.

You do not have to have a hot spare. If one disk fails, if there is enough free space on other drives to rebuild parity/mirror, it will be automatically rebuilt. If not enough space, you will have no redundancy until disk is replaced. Please do have a backup of important stuff. RAID or any type of redundancy is not a replacement for backups – to a different machine at least.

>> If not enough space, you will have no redundancy until disk is replaced.

Okay, so the best practice is to use all the storage slots for maximum capacity, right?

But if I want to replace a disk that is going bad or has failed while all slots are full, as you said, is it possible to replace it while the server is still online, without any hot spare? If you have an article about it, please share it with me, because SS is still a mystery to me.

I agree with you, backup is very very important !

Last night, found something weird with my pool.

I created a pool from 22 SSDs (960GB each), and the allocated size came to 17.67 TB. I created a parity virtual disk with thin provisioning (maximum capacity), so the virtual disk size came to 14.5 TB, and the volume created on it was 14.5 TB too. After several months SS detects only 7TB; when I check the free size, it still shows another 7TB of free space. This put some VMs into a critical-stop state, LoL, and right now I have no idea how to troubleshoot it. I just removed some data to bring the data under 7TB, and the VMs can run normally again.

https://tinypng.com/web/output/dn22nvj633k2da0f96c52r181ugk1xtd/Bug%20Storage%20Space%20Pool.png

Yes, you can put all disks to use (no hot spare), and as long as TOTAL free space is > 1 disk capacity, rebuild will start automatically after disk loss, the same as having a hot spare, but it will use available capacity from all other disks to regain redundancy. Replacing a disk is possible “online” if hardware does not forbid so, but the disk first needs to be in the required state for replacement: “Retired”. Example is here: https://www.tech-coffee.net/real-case-storage-spaces-direct-physical-disk-replacement/
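The rule of thumb above (total free space on the remaining disks must exceed the capacity of the lost disk) can be written down as a quick check. This is only a sketch of that rule as stated in the reply, not of how Storage Spaces decides internally; the function name and the example numbers are invented for illustration.

```python
def can_rebuild_without_spare(disk_sizes_tb, free_tb, failed_index):
    """Rule-of-thumb check: can redundancy be regained with no hot spare?

    Assumption (taken from the reply above): a rebuild can start if the
    total free space on the surviving disks is greater than the capacity
    of the failed disk.
    """
    survivors_free = sum(
        free for i, free in enumerate(free_tb) if i != failed_index
    )
    return survivors_free > disk_sizes_tb[failed_index]


sizes = [6, 6, 6]  # three 6TB disks, hypothetical pool

# 4TB free on each disk: survivors offer 8TB, more than the lost 6TB.
print(can_rebuild_without_spare(sizes, [4, 4, 4], failed_index=0))  # True

# 2TB free on each disk: survivors offer only 4TB.
print(can_rebuild_without_spare(sizes, [2, 2, 2], failed_index=0))  # False
```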

Allocated space of the pool is not the same as allocated space of all VDs. E.g. 1 mirror VD of 100GB capacity will take e.g. 201 GB of space on the pool (2x100GB + overhead for metadata). If a VD is “thin”, it will dynamically allocate space as data grows. The capacity of a “thin” VD is deceiving: it is easy to overprovision and get the VD into a “read-only” state, which is why pool capacity needs to be closely monitored. But a VD will NEVER shrink. Shrinking is not possible; you need to create a new smaller VD, copy the data over, and drop the old VD.
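To make the pool-versus-VD arithmetic concrete, here is a small Python sketch. The 1 GB metadata overhead is only the illustrative figure from the example above (201 GB for a 100GB mirror), not a documented constant, and both function names are hypothetical.

```python
def pool_footprint_gb(vd_size_gb, copies=2, metadata_overhead_gb=1):
    """Pool space a fully allocated VD takes: copies x size + overhead.

    metadata_overhead_gb=1 is an assumption taken from the 201 GB example.
    """
    return copies * vd_size_gb + metadata_overhead_gb


def is_overprovisioned(pool_capacity_gb, thin_vd_sizes_gb, copies=2,
                       metadata_overhead_gb=1):
    """True if the thin VDs could outgrow the pool once they fill up."""
    worst_case = sum(
        pool_footprint_gb(size, copies, metadata_overhead_gb)
        for size in thin_vd_sizes_gb
    )
    return worst_case > pool_capacity_gb


print(pool_footprint_gb(100))                 # 201
print(is_overprovisioned(1000, [100, 100]))   # False: 402 GB worst case
print(is_overprovisioned(1000, [300, 300]))   # True: 1202 GB worst case
```

A check like this only flags the risk; the actual allocated space of the pool still has to be watched as the data grows.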

This is a great article! Even for a marginally technical person it really helped.

I do have a question that maybe someone can answer. Over time as my storage needs increased (I now am doing 360 videos, for instance) I rather haphazardly bought additional external drives and ultimately ran out of USB connections on my PC, so I bought an “Amazon Basics USB-C 3.1 7-Port Hub with Power Adapter – 36W Powered (12V/3A)”, connected it to a USB-C 3.1 port. I then connected four drives to it that I mainly use for long-term storage.

I recently decided to set up storage spaces so I set it up using two directly-connected 8TB external drives. Works great. When I tried to add some of the drives attached to the 7-port hub, however, they won’t connect to the storage spaces. I get an error message that says “Can’t add drives. Check the drive connections, and then try again. The request is not supported (0x00000032).” I suspect the issue is that the drives I’m trying to add are connected through the USB hub. Any thoughts on this?

Storage Spaces is sometimes stubborn in accepting certain disks, but that is often solvable. Here is a quick google-fu on your error, let me know if that helped: https://answers.microsoft.com/en-us/windows/forum/all/storage-spaces-win-10-cant-add-new-drive-error/eca10bd6-5564-45a0-9649-d00619a68fe6

Sorry for not getting back to you sooner, Vedran, but I wanted to try some things first. Unfortunately, the link you gave me didn’t help my situation. Since my problem involved trying to add drives that were attached to a USB hub I figured out how to free up one USB connector on the PC and attached an 8TB drive to it – giving me 3 directly connected 8TB drives: two in my existing pool and one that I wanted to add. I had the same problem. After some more google searching I found this article, which ultimately solved the problem of the new drive not being able to connect into the pool: https://answers.microsoft.com/en-us/windows/forum/all/windows-10-giving-cant-create-the-pool-and-check/4d841cc6-f48c-48b8-bd05-0a42f116df84?page=1 For anyone that might be interested, the solution came from “Duct Tape Dude” and was simply to disconnect then reconnect the drive. I’m not sure why but it worked. I’ve not tried adding any of the USB-hub connected drives (I’m still trying to figure out my ultimate strategy) so I’m not sure if this would allow them to connect, also, but at least for now all is working. Anyway, I greatly appreciate your article and your help, Vedran!

Storage space direct and storage spaces. Well I would not recommend using them if you have an actual raid controller. It’s great if you want cheap storage yes and has many options but the read and write to the drives is slower than my old Pentium 4 running an SSD.

I have an Intel RAID on PCI Express 4.0, and my 4 SSDs in RAID 5 are doing over 40 GB/s read and write. A standard Qnap with 8 drives can get over 3000 mb/s easily with SAS 10k’s. I started a new job working in IT support, and the system admin here had an actual RAID controller but instead used it as a JBOD and then set up Storage Spaces Direct. He has a 78 TB cluster on it and about 20 mapped drives. He even added 4 SSD drives into the mix to try and improve the speed. The average read speed is about 800 mb/s and the average write speed is 170 mb/s; that’s using MS DiskSpd tests.

It’s quite sad. My old WDC Raptor drive by itself gets a better write speed, 220 mb/s on a single drive. Of course my system admin boss believes everything Microsoft tells him. I tried to show him the proof that it sucks and he just said, and I quote, “Microsoft told me it should be super fast”.

Yep, agreed that if you do have a decent RAID controller, use it! Storage Spaces in Parity mode (aka RAID5) is known to have super-slow writes. That is why I do not use that mode, except maybe for archiving. I use fast modes, like no parity or mirroring; they achieve speeds of 5000 MB/s and higher, in both reads and writes, on decent hardware, when configured correctly (column count!). Anyone can claim anything, but measuring is the only truth, and measured numbers speak louder than words. So I don’t know why one would rely on words more than on actual measured speed results.

On myth 6, you said:

Each expand seems to take some space (around 250MB).

Yup, it’s true. And one expansion takes exactly 256 MB, or 1 base interleave size. And this space can be recovered by this command:

Optimize-Volume -DriveLetter [drive-letter-of-storage-spaces-volume] -Analyze -Verbose -ReTrim -SlabConsolidate